Robust Mobile Banknote Classification & Monetary Conversion [SUMMER 2013]

Image Processing & Machine Learning Project

Robust Mobile Banknote Classification

Classifying 14 Bills At Once

Algorithm Is Robust To Overlap

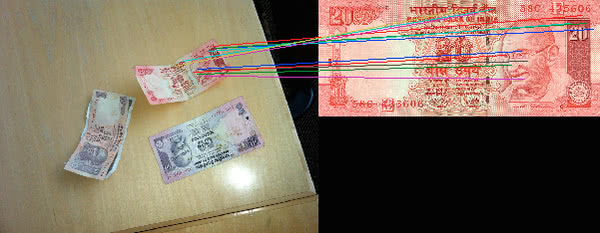

Image To Database Feature Map

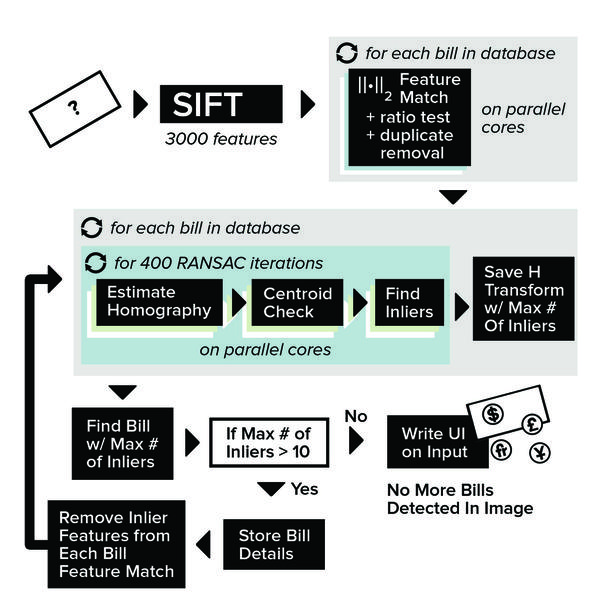

Feature Extraction Process

Overview

For this project, I built a mobile system that recognizes multiple foreign banknotes with varying levels of occlusion. The system uses a client-server architecture: the mobile device sends an image that may contain banknotes to a server, which applies the note recognition algorithm to locate and identify every banknote in the image and then sends the image back to the device with additional information superimposed on it. This information includes the position of each banknote, its country of origin, and its value in both the local currency and USD. The recognition algorithm uses SIFT (Scale-Invariant Feature Transform) keypoints and RANSAC (Random Sample Consensus) to detect banknotes across a variety of orientations, scales, and settings.
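The text does not say which geometric model the RANSAC step fits. As a minimal sketch of the idea, assuming SIFT descriptor matching has already produced candidate point pairs, the Python below runs RANSAC with a 2-point similarity transform (rotation, uniform scale, translation). Points are stored as complex numbers, so a single complex factor `a` encodes rotation and scale and `b` encodes translation. The function names, the 3-pixel tolerance, and the iteration count are illustrative assumptions; a full system would more likely fit a homography, which needs four correspondences per sample.

```python
import cmath
import random

def fit_similarity(p1, p2, q1, q2):
    """Solve q = a*p + b from two correspondences.
    a (complex) encodes rotation and scale; b (complex) encodes translation."""
    if p1 == p2:
        return None  # degenerate sample: the two source points coincide
    a = (q1 - q2) / (p1 - p2)
    b = q1 - a * p1
    return a, b

def ransac_similarity(matches, iters=200, tol=3.0, seed=0):
    """matches: list of (database_point, photo_point) pairs as complex x + yj.
    Returns (best_model, inlier_indices); best_model is None if nothing fits."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    if len(matches) < 2:
        return best_model, best_inliers
    for _ in range(iters):
        (p1, q1), (p2, q2) = rng.sample(matches, 2)  # minimal random sample
        model = fit_similarity(p1, p2, q1, q2)
        if model is None:
            continue
        a, b = model
        # Consensus set: matches whose mapped point lands within tol pixels.
        inliers = [i for i, (p, q) in enumerate(matches) if abs(a * p + b - q) <= tol]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers

# Synthetic check: 20 correct matches under a known transform plus 5 mismatches.
a_true = cmath.rect(0.5, cmath.pi / 6)  # scale 0.5, rotation 30 degrees
b_true = 100 + 50j
db_points = [complex(x, y) for x in range(0, 500, 100) for y in range(0, 400, 100)]
matches = [(p, a_true * p + b_true) for p in db_points]
matches += [(complex(37 * k, 11 * k), 900 + 900j) for k in range(5)]
model, inliers = ransac_similarity(matches)
print(len(inliers))  # 20
```

RANSAC keeps the model with the largest consensus set, so mismatched keypoints from clutter or overlapping notes are ignored rather than averaged into the estimate.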

Before the mobile recognition system can run, a database of banknote images must be assembled for the mobile photographs to be compared against. Because comparisons are performed on keypoint feature descriptors, each banknote collected for the database is first scanned individually and then preprocessed by applying SIFT to each side of the note separately. With a peak threshold of 0.5, SIFT produces several thousand keypoints per image, of which only the top five hundred are retained for computational efficiency during comparison. The feature descriptors of these keypoints are stored in the database along with the banknote's country of origin, the side imaged (front or back), and the note's local value.
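A minimal sketch of a database entry and of a comparison against it, assuming a brute-force descriptor matcher with Lowe's ratio test (a standard choice for SIFT, not confirmed by the text). The text keeps the top 500 keypoints but does not state the ranking criterion, so `build_entry` ranks by a hypothetical per-keypoint score (for example, detector response). `NoteSide`, `build_entry`, `ratio_test_matches`, and `best_entry` are illustrative names, and the toy 3-dimensional descriptors stand in for SIFT's 128-dimensional ones.

```python
import math
from dataclasses import dataclass, field

MAX_KEYPOINTS = 500  # keypoints kept per banknote side, as in the text

@dataclass
class NoteSide:
    """One database entry: one side of one scanned banknote."""
    country: str
    side: str  # "front" or "back"
    local_value: float
    descriptors: list = field(default_factory=list)  # one vector per kept keypoint

def build_entry(country, side, local_value, keypoints, max_keypoints=MAX_KEYPOINTS):
    """keypoints: list of (score, descriptor). Keeps the highest-scoring ones;
    ranking by this hypothetical score is an assumption."""
    ranked = sorted(keypoints, key=lambda kp: kp[0], reverse=True)[:max_keypoints]
    return NoteSide(country, side, local_value, [desc for _, desc in ranked])

def ratio_test_matches(query, entry, ratio=0.8):
    """Return (query_index, entry_index) pairs that pass Lowe's ratio test."""
    matches = []
    for qi, q in enumerate(query):
        # Distances from this query descriptor to every stored one, nearest first.
        dists = sorted((math.dist(q, d), di) for di, d in enumerate(entry.descriptors))
        if len(dists) >= 2 and dists[0][0] < ratio * dists[1][0]:
            matches.append((qi, dists[0][1]))
    return matches

def best_entry(query, database):
    """Database entry with the most ratio-test matches."""
    return max(database, key=lambda e: len(ratio_test_matches(query, e)))

# Toy example with 3-D descriptors and hypothetical notes "A" and "B".
db = [
    build_entry("A", "front", 1000, [(0.9, (1, 0, 0)), (0.8, (0, 1, 0)), (0.7, (0, 0, 1))]),
    build_entry("B", "front", 20, [(0.9, (5, 5, 5)), (0.8, (6, 6, 6)), (0.7, (7, 7, 7))]),
]
photo = [(1, 0.05, 0), (0, 1, 0.05)]
print(best_entry(photo, db).country)  # A
```

Brute-force search keeps the sketch short; the matched keypoint coordinates would then feed the RANSAC geometric check.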

With our Android application installed and running on a camera-equipped mobile device, the user starts identifying and converting foreign currency into United States dollars (USD) by taking a photograph of the banknotes with as little blur as possible. The image is then uploaded to a remote server over the device's wireless data connection, in most cases Wi-Fi.
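The text does not specify the transport protocol or message format. As a hedged sketch, assuming an HTTP POST of JPEG bytes, the Python below starts a local server and plays the phone's role with `urllib`. For brevity the server returns the annotations as JSON instead of an annotated image, and it also shows the USD conversion step. `recognize` is a hypothetical stub standing in for the SIFT and RANSAC recognizer, the `/recognize` path is invented, and the currency codes `AAA`/`BBB` and their rates are placeholders, not real exchange rates.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Placeholder rates (USD per unit of local currency); NOT real exchange rates.
USD_PER_UNIT = {"AAA": 0.01, "BBB": 0.5}

def recognize(image_bytes):
    """Hypothetical stub for the server-side recognizer; box is [x, y, w, h]."""
    return [{"currency": "AAA", "local_value": 1000, "box": [10, 20, 300, 160]}]

class RecognizeHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        image = self.rfile.read(length)  # raw JPEG bytes from the phone
        notes = recognize(image)
        for note in notes:  # convert each note's local value to USD
            note["usd"] = round(note["local_value"] * USD_PER_UNIT[note["currency"]], 2)
        total = round(sum(n["usd"] for n in notes), 2)
        body = json.dumps({"notes": notes, "total_usd": total}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet

def upload(url, image_bytes):
    """Client side: POST the photo and decode the JSON reply."""
    req = urllib.request.Request(
        url, data=image_bytes, headers={"Content-Type": "image/jpeg"}, method="POST"
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.loads(resp.read())

# Port 0 asks the OS for any free port.
server = HTTPServer(("127.0.0.1", 0), RecognizeHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
result = upload(f"http://127.0.0.1:{server.server_port}/recognize", b"\xff\xd8 fake jpeg")
print(result["total_usd"])  # 10.0
server.shutdown()
server.server_close()
```

Keeping recognition and conversion on the server means the phone only captures and uploads, which matches the client-server split described above.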

The exact methods of image feature extraction, geometric consistency checking, and server-client communication are discussed in the paper and poster below.